Before going further with GTID based replication let’s have quick look over coordinate replication and understand the need of GTID based replication !!!

How Coordinate Replication Works??

1. Master server enables binary log

2. Client commits query to the master

3. Master executes the query and commits it

4. Master stores the query in the binary log as en event

5. Master returns to the client with commit confirmation

6. Slave server configures replication

mysql> CHANGE MASTER TO MASTER_HOST='192.168.56.110',MASTER_USER='repl',

MASTER_PASSWORD='password',MASTER_LOG_FILE='mysql-bin.000001', MASTER_LOG_POS= 107;

7. Replication starts mysql> START SLAVE;

8. IO thread starts and initiates dump thread on the master

9. Dump thread reads events from binary log

10. Dump thread sends events to IO thread from the slave

11. IO thread writes events into relay log

12. IO thread updates master.info file parameters

13. SQL thread reads relay log events

14. SQL thread executes events on the slave

15. SQL thread updates relay-log.info file

HA and Coordinate Replication

Coordinate based replication is great – it is easy to setup

Coordinate based replication is bad – it is difficult to failover

- When the master fails, the slaves are ready to replace it

- However, the process of failure detection and acting upon in case of multiple servers requires significant DBA intervention

- Difficult to follow changes through a complex replication stream that go to multiple servers

If every transaction has its own globally unique identifier (GTID), it becomes a lot easier to track changes

Advantages?

- It is possible to identify a transaction uniquely across the replication servers.

- Make the automation of failover process much easier. There is no need to do calculations, inspect the binary log and so on. Just execute the command MASTER_AUTO_POSITION=1.

- At application level it is easier to do WRITE/READ split. After a write on the MASTER you have a GTID so just check if that GTID has been executed on the SLAVE that you use for reads.

- Development of new automation tools isn’t a pain now.

Drawbacks?

- Additional complexity

- Incompatibility with existing solution – coordinate based replication

What is GTID??

GTID Replication Basics

- Each server has binary log (master and slave)

- GTIDs are written into binary log

- GTIDs executed on a server contained in a new, read-only, global server variable GTID_EXECUTED

- GTID_EXECUTED holds the range of GTIDs committed on this server as a string

How to Configure GTID Replication??

STEP 1: Edit my.cnf with new additional parameters

gtid_mode

- It could be ON or OFF (not 1 or 0)

- It enables the GTID on the server

- Enables binary logs

- Mandatory to create a replication

- Slave servers must log its changes

- Needed for server promotion/demotion

- Forces the server to be safe by using only transactional tables

- Non-transactional statements are denied by the server.

STEP 2: New replication configuration command

slave> CHANGE MASTER TO MASTER_HOST=’node1',

MASTER_USER=’root',

MASTER_PASSWORD=’google@123',

MASTER_AUTO_POSITION=1;

Ahh!! Enough theory we had I guess, let’s Have Practical implementation

now J :

Below are the high level Steps for GTID based "master-master" replication setup

- Enable GTID each nodes

- Create replication users on each nodes

- Take the backup from master and restore on slave servers ( Skip this step if both servers are new / fresh installation )

- Verify the GTID parameters

- Configure the replication with GTID in place.

STEP 1: Enable GTID each nodes

On Node1: Edit you /etc/my.cnf to add GTID parameters followed by restart

On Node2: Edit your /etc/my.cnf to add GTID parameters followed by restart

STEP 2: Create replication users on each nodes

This would be the same user which we have created in coordinate based replication(previous blog). I am just pasting the command. Those who are doing fresh setup need to do on both nodes.

GRANT REPLICATION SLAVE ON *.* TO 'repl'@'%' IDENTIFIED BY 'google@123';

STEP 3: Take the backup from master and restore on slave servers ( Skip this step if both servers are new / fresh installation )

Follow blog(backup/restore using MEB 3.12)

Backup the master instance

$ mysqlbackup -uroot -pgoogle@123 --datadir=/var/lib/mysql --backup_dir=/tmp/ --with-timestamp backup-and-apply-log

Restore the backup on Slave node

$ mysqlbackup --defaults-file=/etc/my.cnf --datadir=/var/lib/mysql/ --backup-dir=/tmp/2017-02-27_14-51-33 copy-back-and-apply-log

STEP 4: Verify the GTID parameters on both nodes

show global variables like '%gtid%';

STEP 5: Configure the replication with GTID in place. Skip stop and reset slave for new replication setup.

I reset it because we have already coordinate replication in place configured in previous blog.

show global variables like '%gtid%';

STEP 5: Configure the replication with GTID in place. Skip stop and reset slave for new replication setup.

I reset it because we have already coordinate replication in place configured in previous blog.

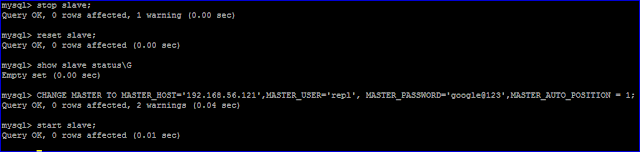

On Node1:

stop slave;

reset slave;

show slave status\G

CHANGE MASTER TO MASTER_HOST='192.168.56.121',MASTER_USER='repl', MASTER_PASSWORD='google@123',MASTER_AUTO_POSITION = 1;

start slave;

On Node2:

stop slave;

reset slave;

show slave status\G

CHANGE MASTER TO MASTER_HOST='192.168.56.110',MASTER_USER='repl', MASTER_PASSWORD='google@123',MASTER_AUTO_POSITION = 1;

start slave;